Have a Merry Christmas and a Happy New year!

Open Access Journal of Biogeneric Science and Research (JBGSR)

Our main goal in establishing the Open Access Journal of BGSR is to provide a unique platform that covers all scientific subjects and offers essential information to all research communities. Biogeneric Sciences is an open access platform that publishes peer-reviewed journal articles such as Research articles, Reviews, Conceptual Papers, Case Reports, seminar presentations (PPT), and more, along with effective video articles that make the material easy to understand and learn for specialists and general readers alike.


An Internet of Things and Machine Learning Based System to Measure Precursors of Epileptic Seizures by Bahman Zohuri in Open Access Journal of Biogeneric Science and Research (JBGSR)

Abstract

Artificial intelligence (AI) has come to occupy a prominent place in our present lives. Almost every industry that deals with a huge volume of data is taking advantage of AI by integrating it into its day-to-day operations. AI has predictive power based on its data-analytics functionality and some level of autonomous learning, and its raw ingredient is simply a massive volume of data. AI is about extracting value from data, which becomes core business value when insight can be extracted. AI has many fundamental applications, and the technology can be applied to many different sectors and industries. Its use in nanotechnology research has grown tremendously during the last decades, and the convergence of artificial intelligence and nanotechnology can shape the path for technological developments across a large variety of disciplines. In this short communication, we present innovative and dynamic areas where artificial intelligence and its subsets, machine learning and deep learning, are applied in nanotechnology.

Keywords: Artificial Intelligence; Machine Learning; Deep Learning; Nanoscience; Nanotechnology; Atomic Force Microscopy; Simulations; Nanocomputing

Abbreviations: AI: Artificial Intelligence; AFM: Atomic Force Microscope; STM: Scanning Tunneling Microscope; ML: Machine Learning; DL: Deep Learning; BMI: Brain Machine Interfaces; EEG: Electroencephalography; HPC: High-Performance Computing; PSPD: Position-Sensitive Photodiode; IoT: Internet of Things; TCO: Total Cost of Ownership; ROI: Return on Investment

When Richard Feynman (Figure 1), an American physicist, Nobel laureate, and physics professor at the California Institute of Technology (Caltech), gave a talk titled “There’s Plenty of Room at the Bottom” [1] at an American Physical Society (APS) meeting at Caltech on December 29, 1959, he opened the door to the ideas and concepts behind nanoscience and nanotechnology. This talk came well before the term nanotechnology entered everyday English.

In his talk, Feynman described a process in which scientists would manipulate and control individual atoms and molecules. Over a decade later, in his explorations of ultraprecision machining, Professor Norio Taniguchi coined the term nanotechnology. It was not until 1981, with the development of the scanning tunneling microscope that could "see" individual atoms, that modern nanotechnology began.

These days, whenever we talk about nanotechnology, we should think about how small things can be, down to a single atom of an element. From a scaling point of view, what is the “size of the nanoscale,” or simply, just how small is “nano”? In the metric International System of Units (SI), the prefix “nano” means one-billionth, or 10⁻⁹; therefore, one nanometer is one-billionth of a meter. It’s difficult to imagine just how small that is, so here are some examples to make the matter clearer [2].

1. A sheet of paper is about 100,000 nanometers thick.

2. A human hair is approximately 80,000 to 100,000 nanometers wide.

3. A single gold atom is about a third of a nanometer in diameter.

4. On a comparative scale, if a marble diameter were one nanometer, then the diameter of the Earth would be about one meter.

5. One nanometer is about as long as your fingernail grows in one second.

6. A strand of human DNA is 2.5 nanometers in diameter.

7. There are 25,400,000 nanometers in one inch.
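Several of these examples follow directly from the prefix definition (1 nm = 10⁻⁹ m). The short Python sketch below checks three of them. The Earth diameter (about 12,742 km) and the 365.25-day year are round reference values assumed for illustration; they are not figures from the source.

```python
from fractions import Fraction

NM_PER_M = 10**9                    # "nano" means 10^-9, so 1 m = 10^9 nm
INCH_IN_M = Fraction(254, 10_000)   # 1 inch = 0.0254 m exactly

def m_to_nm(meters):
    """Convert a length in meters to nanometers."""
    return meters * NM_PER_M

# Example 7: nanometers in one inch (exact, thanks to Fraction)
nm_per_inch = m_to_nm(INCH_IN_M)

# Example 5: a fingernail growing 1 nm every second, expressed in cm per year
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # assumed 365.25-day year
nail_cm_per_year = 1e-9 * SECONDS_PER_YEAR * 100

# Example 4: shrink Earth by the same factor (10^9) that maps 1 m down to 1 nm
EARTH_DIAMETER_M = 12_742_000           # assumed mean diameter, about 12,742 km
marble_cm = EARTH_DIAMETER_M / NM_PER_M * 100

print(int(nm_per_inch))                 # 25400000
print(round(nail_cm_per_year, 1))       # 3.2
print(round(marble_cm, 2))              # 1.27
```

A marble of about 1.3 cm scaled up by 10⁹ gives roughly the diameter of the Earth, which is exactly the comparison in example 4.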

The illustration in (Figure 2) has three visual examples of the size and the scale of nanotechnology, showing just how small things at the nanoscale are. Nanoscience and nanotechnology involve the ability to see and to control individual atoms and molecules. Everything on Earth is made up of atoms - the food we eat, the clothes we wear, the buildings and houses we live in, and our bodies. But something as small as an atom is impossible to see with the naked eye. In fact, it’s impossible to see with the microscopes typically used in a high school science class. The microscopes needed to see things at the nanoscale were invented in the early 1980s.

Once scientists had the right tools, such as the Scanning Tunneling Microscope (STM) and the Atomic Force Microscope (AFM), the age of nanotechnology was born. Although modern nanoscience and nanotechnology are quite new, nanoscale materials have been used for centuries. Gold and silver particles of different sizes created the colors in the stained-glass windows of medieval churches hundreds of years ago. The artists of that time did not know that the process they used to create these beautiful works of art actually changed the composition of the materials they were working with.

Today's scientists and engineers are finding a wide variety of ways to deliberately make materials at the nanoscale to take advantage of their enhanced properties such as higher strength, lighter weight, increased control of light spectrum, and greater chemical reactivity than their larger-scale counterparts [3].

Figure 1: Professor Richard Feynman.

Figure 2: The Scale of Things [2].

In short, nanotechnology is the use of matter on an atomic, molecular, and supramolecular scale for industrial purposes: the design, production, and application of structures, devices, and systems by controlling size and shape at the nanometer scale. Thus, in nanoscience and nanotechnology, where we deal with the smallest scales, “Small is Powerful”.

However, when we work at small scales, particularly at the scale of atoms, practical situations and applications, especially in medicine, confront us with a sheer volume of data that must be collected and analyzed, ideally in real time. This requires extensive data analytics and data mining, which is beyond human capacity. With Artificial Intelligence (AI) technology and its technical advances over the past decade, we can turn to AI as a partner for help. By today’s standard of AI implementation across practically every industry, we know that AI and the human brain complement each other at every level of business [4].

For example, in biomedicine, AI-driven drug discovery uses computational methods to research new pharmaceuticals and to repurpose existing compounds for new uses, and this has become common practice. AI and its two subfields, Machine Learning (ML) and Deep Learning (DL), can help drug discovery improve productivity and ensure regulatory compliance, transforming data at the speed of a computer’s Central Processing Unit (CPU) and working digitally at scale and speed, including in nanotechnology, to optimize the business.

Since we now face a fast-paced, sheer volume of data in our day-to-day operations, Artificial Intelligence (AI) is already changing the way we think and operate. But what is the full potential of this technology, and how can you best realize it? In medicine, integrating artificial intelligence and nanotechnology enhances precision cancer medicine. Artificial intelligence (AI) and nanotechnology are two fields that are instrumental in realizing the goal of precision medicine: tailoring the best treatment for each cancer patient.

A confluence of technological capabilities is creating an opportunity for machine learning and Artificial Intelligence (AI) to enable “smart” nanoengineered Brain Machine Interfaces (BMI). This new generation of technologies will be able to communicate with the brain in ways that support contextual learning and adaptation to changing functional requirements. This applies both to invasive technologies aimed at restoring neurological function, as in the case of neural prostheses, and to non-invasive technologies enabled by signals such as electroencephalography (EEG).

Advances in computation, hardware, and algorithms that learn and adapt in a contextually dependent way will be able to leverage the capabilities that nanoengineering offers the design and functionality of BMI.

These are a few examples of how Artificial Intelligence and nanoscience/nanotechnology can work hand in hand and augment each other very well. In the next few sections of this short paper, we explore this opportunity for collaboration between the two technologies.

The past decade has seen a new, revolutionary technology with applications across all industries. This innovative technology, Artificial Intelligence, has been driving Business Intelligence to a new level in any business operation with a large magnitude of incoming data to analyze. The day-to-day operation of such businesses, with their sheer volume of data (i.e., Big Data), requires AI augmentation in conjunction with High-Performance Computing (HPC). Artificial Intelligence (AI) is intelligence demonstrated by machines, unlike the natural intelligence displayed by humans and animals. In other words, AI, the new buzzword of the technology market, is the science of making machines as smart and intelligent as humans, with the ultimate goal of progressing from weak AI to super AI.

Such progression within the domain of AI depends, by definition, on the ability of a computer algorithm or program, particularly one running on a High-Performance Computing (HPC) system or other machine, to think and learn much like a human being. AI is a technology that is transforming every walk of life. It is a wide-ranging tool that enables people to rethink how we gather information, analyze data, and use the resulting insight to make better-informed decisions. These days, AI is essential because the amount of data generated by humans and machines far outpaces humans’ ability to absorb and interpret that data and make complex decisions based on it.

AI is increasingly part of our everyday environment, in systems such as virtual assistants, expert systems, and self-driving cars. Nevertheless, the technology is still in the early days of its development. Although they vary in their abilities, all current AI systems are examples of weak AI. The field of artificial intelligence moves fast, and progress has been breathtaking and relentless; five years from now, the field will likely look very different than it does today. The envisioned super AI would be the best at everything: mathematics, science, medicine, hobbies, you name it. Even the brightest human minds could not come close to its abilities.

With this basic understanding of AI, there are certain key factors one should know about AI (Figure 3):

1. It is essential to distinguish different types of Artificial Intelligence and different phases of AI evolution when it comes to developing application programs.

2. Without recognizing the different types of AI and the scope of the related applications, confusion may arise, and expectations may be far from reality.

3. In fact, the "broad" definition of Artificial Intelligence is "vague" and can cause a misrepresentation of the type of AI that we discuss and develop today.

To understand how Artificial Intelligence works, one needs to dive deep into its various sub-domains and understand how those sub-domains can be applied to various fields of industry.

Figure 3: The Pyramid of AI, ML and DL.

Machine learning is the branch of artificial intelligence that addresses how to build computers that automatically improve through experience. In essence, machine learning is about extracting knowledge from data. It is a research field at the intersection of statistics, artificial intelligence, and computer science, and is also known as predictive analytics or statistical learning. Its main idea is that it is possible to create algorithms that learn from data and make predictions based on them. Recent progress in machine learning has been driven by the development of new learning algorithms and theory, and by the ongoing explosion in online data availability and low-cost computation.
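As a minimal illustration of an algorithm that learns from data and then makes predictions, the Python sketch below fits a straight line to example points by ordinary least squares and predicts an unseen value. The data and function names are hypothetical, and linear regression is simply one of the most basic learning algorithms, not a method from this paper.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    """Use the learned parameters to predict y for a new x."""
    slope, intercept = model
    return slope * x + intercept

# "Experience": observed input/output pairs (hypothetical example data)
model = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(model)               # (2.0, 1.0)
print(predict(model, 5))   # 11.0
```

The "learning" here is the estimation of the two parameters from the data; richer models learn many more parameters, but follow the same fit-then-predict pattern.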

With immense data growth, machine learning has become a significant and key technique in solving problems. Machine learning finds the natural pattern in data that generates insight to help make better decisions and predictions. It is an integral part of many commercial applications ranging from medical diagnosis, stock trading, energy forecasting, and many more.

Consider a complicated task or problem involving a lot of data with many variables but no existing formula or equation to describe it: this is where machine learning helps. Machine learning is also part of a new employment dynamic, creating jobs centered on analytical work augmented by Artificial Intelligence (AI). Machine Learning (ML) provides smart alternatives for analyzing vast volumes of data; by developing fast, efficient algorithms and data-driven models for real-time data processing, ML can produce accurate results and analyses.

Deep Learning (DL) is a subset of machine learning, which in turn is a subset of artificial intelligence. Deep learning is inspired by the structure of the human brain. Deep learning algorithms attempt to draw conclusions similar to those humans would draw by continually analyzing data with a given logical structure. To achieve this, deep learning uses a multi-layered structure of algorithms called neural networks. Just as humans use their brains to identify patterns and classify different types of information, neural networks can be taught to perform the same tasks on data.
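To make the multi-layered structure concrete, here is a tiny two-layer network in Python that computes XOR, a function that no single threshold neuron can represent because its classes are not linearly separable. The weights are chosen by hand for illustration (a real deep learning model would learn them from data by training), and all names are hypothetical.

```python
def step(value):
    """Threshold activation: the neuron fires (1) when its input is positive."""
    return 1 if value > 0 else 0

def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights is one neuron."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hand-chosen weights (hypothetical; a trained network would learn these)
HIDDEN_WEIGHTS = [[1, 1], [1, 1]]
HIDDEN_BIASES = [-0.5, -1.5]     # neuron 1 behaves like OR, neuron 2 like AND
OUTPUT_WEIGHTS = [[1, -1]]
OUTPUT_BIASES = [-0.5]           # fires for "OR but not AND", i.e. XOR

def xor_network(x1, x2):
    hidden = dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)   # layer 1
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]    # layer 2

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The hidden layer builds intermediate features (OR, AND) that the output layer combines; stacking many such layers is what makes a network "deep".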

Whenever humans receive new information, the brain tries to compare it with known objects, and deep neural networks use the same concept. With neural networks, we can group or sort unlabeled data based on similarities among the samples. Artificial neural networks have capabilities that enable deep learning models to solve tasks that are very difficult for conventional machine learning models.
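The idea of grouping unlabeled samples by similarity can be sketched with k-means clustering. Note that k-means is a classical clustering algorithm, not a neural network; it is swapped in here only because it shows unsupervised grouping in a few lines. Seeding the centroids with the first k points is a simplification assumed for determinism (practical implementations typically use smarter seeding such as k-means++), and the sample data are hypothetical.

```python
import math

def nearest(point, centroids):
    """Index of the centroid closest to point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))

def kmeans(points, k, max_iterations=100):
    centroids = [tuple(p) for p in points[:k]]   # simplified, deterministic seeding
    labels = None
    for _ in range(max_iterations):
        new_labels = [nearest(p, centroids) for p in points]   # assignment step
        if new_labels == labels:
            break                                              # stable: converged
        labels = new_labels
        for c in range(k):                                     # update step
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids

# Two unlabeled groups of 2-D samples (hypothetical data)
samples = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels, centers = kmeans(samples, k=2)
print(labels)   # [0, 0, 0, 1, 1, 1]
```

No labels are given to the algorithm; the two groups emerge purely from the distances between samples.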

One of the main advantages of deep learning lies in solving complex problems that require discovering hidden patterns in the data and/or a deep understanding of intricate relationships between a large number of interdependent variables. When there is a lack of domain understanding for feature introspection, Deep Learning techniques outshine others, as you have to worry less about feature engineering. Deep Learning shines when it comes to complex problems such as image classification, natural language processing, and speech recognition.

Artificial intelligence has been a growing area for many decades, not only within itself, where machine learning, deep learning, and artificial neural networks develop side by side, but also in the number of fields and industries in which it is now prevalent. Nanoscience and nanotechnology are the study and application of extremely small things, and there are several growing areas where AI converges with nanotechnology.

During the last decade, there has been increasing use of artificial intelligence tools in nanotechnology research. In this paper, we review some of these efforts in the context of interpreting scanning probe microscopy, simulations, and nanocomputing.

Atomic force microscopy is one of the most versatile and powerful microscopy technologies for studying different samples at the nanoscale. It is considered versatile because it can image three-dimensional topography and provide various surface measurements for the needs of scientists and engineers. Although atomic force microscopy has advanced significantly in recent years, obtaining high-quality signals remains a challenge. The predominant problem is that many of the tip-sample interactions these microscopes rely on are complex and varied, and therefore not easy to decipher, especially when imaging samples at the nanoscale and manipulating matter at the atomic level.

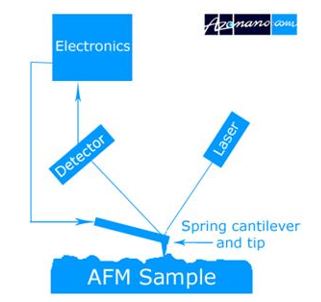

The AFM uses a cantilever with a very sharp tip to scan over the sample surface (Figure 4). As the tip approaches the surface, the close-range attractive force between the surface and the tip deflects the cantilever toward the surface. However, as the cantilever is brought even closer, so that the tip makes contact with the surface, the repulsive force takes over and deflects the cantilever away from the surface.

A laser beam is used to detect the cantilever’s deflection toward or away from the surface: the incident beam reflects off the cantilever’s flat top, so any cantilever deflection causes slight changes in the direction of the reflected beam. A Position-Sensitive Photodiode (PSPD) tracks these changes. Thus, if the AFM tip passes over a raised surface feature, the resulting cantilever deflection and the subsequent change in the reflected beam’s direction are recorded by the PSPD.
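Why a PSPD can register such tiny deflections comes down to optical-lever geometry. The sketch below uses a standard small-angle approximation that is not stated in the source: for a rectangular cantilever of length L loaded at its free end, a tip deflection z tilts the end by θ = 3z/(2L), reflection turns the beam by 2θ, and the spot on a detector at distance D therefore moves by about 2θD. The cantilever length and detector distance are assumed example values.

```python
def spot_shift(tip_deflection, cantilever_length, detector_distance):
    """Approximate laser-spot displacement on the PSPD (small-angle optical lever).

    Assumes a rectangular cantilever with a point load at its free end, whose end
    slope is theta = 3 * z / (2 * L); reflection doubles the angle to 2 * theta.
    All lengths are in meters.
    """
    theta = 3 * tip_deflection / (2 * cantilever_length)
    return 2 * theta * detector_distance

# Assumed example geometry: 100 um cantilever, detector 2 cm away, 1 nm deflection
shift = spot_shift(1e-9, 100e-6, 0.02)
print(f"spot moves {shift * 1e9:.0f} nm")   # spot moves 600 nm
```

With these assumed values the lever amplifies the motion by 3D/L = 600, which is why a sub-nanometer tip movement becomes a measurable spot displacement.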

The AFM images the topography of a sample surface by scanning the cantilever over the region of interest. The raised and lowered features on the sample surface change the cantilever’s deflection, which is monitored by the PSPD. A feedback loop controls the height of the tip above the surface so that the laser position on the PSPD stays constant; from these height adjustments, the AFM can generate an accurate topographic map of the surface features.
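The feedback loop can be illustrated with a toy simulation of constant-deflection imaging along one scan line. The model is deliberately simplistic and assumed for illustration: deflection equals the surface height minus the height of the cantilever base, and a purely integral controller moves the base until the deflection returns to its setpoint, so the recorded base heights trace the topography. The parameter names (setpoint, ki, steps_per_pixel) are hypothetical.

```python
def scan_line(surface, setpoint=1.0, ki=0.5, steps_per_pixel=30):
    """Toy constant-deflection scan along one line (arbitrary height units).

    Assumed model: deflection = surface height - base height. An integral
    controller moves the base until deflection equals the setpoint, so at
    convergence the surface height is base height + setpoint.
    """
    base = 0.0                  # z-piezo position of the cantilever base
    topography = []
    for height in surface:
        for _ in range(steps_per_pixel):
            deflection = height - base        # what the PSPD would report
            error = deflection - setpoint
            base += ki * error                # integral action cancels the error
        topography.append(base + setpoint)    # recorded height for this pixel
    return topography

true_surface = [0.0, 0.0, 2.0, 2.0, 0.5]
print([round(h, 6) for h in scan_line(true_surface)])   # [0.0, 0.0, 2.0, 2.0, 0.5]
```

In this toy model each controller step halves the error (because ki = 0.5), so 30 steps per pixel are more than enough; a real instrument tunes proportional and integral gains against scan speed and noise.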

AI can be beneficial in dealing with these kinds of signal-related issues. An AI approach known as functional recognition addresses them by directly identifying local behaviors from the measured spectroscopic responses. Artificial Neural Networks (ANNs) can recognize the local behavior of the material being imaged, which simplifies the data and reduces the number of variables that must be considered. Overall, this leads to a much more efficient imaging system.
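A minimal sketch of the recognition idea: a single-layer perceptron, the simplest artificial neural network, is trained on a few labeled reference spectra and then maps each pixel’s spectrum (several numbers) to a single behavior label, which is the data reduction described above. The spectra, labels, and function names are invented for illustration; this is not an implementation of the functional recognition approach itself, which uses richer networks and real measured responses.

```python
def train_perceptron(samples, labels, epochs=100):
    """Classic perceptron learning rule; labels must be +1 or -1."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            if (1 if score > 0 else -1) != y:          # misclassified: nudge toward y
                weights = [w + y * xi for w, xi in zip(weights, x)]
                bias += y
                mistakes += 1
        if mistakes == 0:                               # all reference spectra correct
            break
    return weights, bias

def recognize(model, spectrum):
    """Collapse one spectrum to a single behavior label (+1 or -1)."""
    weights, bias = model
    return 1 if sum(w * xi for w, xi in zip(weights, spectrum)) + bias > 0 else -1

# Hypothetical reference spectra (5 samples each) for two local behaviors
reference = [[0, 1, 2, 3, 4], [0, 1, 2, 4, 5],    # behavior A -> +1
             [4, 3, 2, 1, 0], [5, 4, 2, 1, 0]]    # behavior B -> -1
behavior = [1, 1, -1, -1]
model = train_perceptron(reference, behavior)

# Each pixel's 5-number spectrum becomes one label
pixels = [[1, 1, 2, 3, 3], [3, 3, 2, 1, 1]]
print([recognize(model, p) for p in pixels])   # [1, -1]
```

Applied to every pixel of a spectroscopic map, this turns a stack of spectra into a single image of behavior labels.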

Figure 4: The AFM Principle.

Simulating the system is one of the main problems scientists must face when working at the nanoscale. The difference between nanoscale and microscale imaging is that real optical images cannot be obtained at the nanoscale, so images at this scale must be interpreted, and numerical simulations are usually the best tool for doing so.

A number of applications and programs are used to accurately simulate systems in which atomic effects are present. When correctly employed, these techniques are instrumental in obtaining a precise and valuable picture of what is present in the image. In many cases, however, they are complicated to use, and many parameters must be taken into account to achieve an accurate representation of the system. In such cases, AI can be used to improve the quality of the simulations and make them much easier to obtain and interpret.

The second application of ANNs in simulation software is to reduce the complexity of its configuration. In many numerical simulation methods, many parameters must be fitted to create the optimal configuration for an accurate simulation of the system. Most of these parameters relate to the system’s physical geometry and must be known by the user. Other parameters, however, relate only to the algorithms used in the simulation, and only users with expertise in numerical methods can manage them correctly. This strongly reduces the usability of the software. To overcome this problem, ANNs have been proposed for inclusion in simulation packages to find an adequate configuration automatically [5,6].
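The underlying idea, choosing algorithm-only parameters automatically so the user does not have to, can be shown without an ANN. In the Python sketch below, the "expert" parameter is the number of subintervals in a trapezoidal integration, and a simple convergence search doubles it until two successive results agree within a tolerance. This search is a swapped-in stand-in for the ANN-based configuration proposed in [5,6]; the function names and tolerance are hypothetical.

```python
import math

def trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal subintervals."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * f(a) + interior + 0.5 * f(b))

def auto_configure(f, a, b, tol=1e-6, n_start=2, n_max=2**20):
    """Double n until two successive estimates agree within tol.

    Returns (n, estimate) so the user never has to pick n by hand.
    """
    n = n_start
    previous = trapezoid(f, a, b, n)
    while n < n_max:
        n *= 2
        current = trapezoid(f, a, b, n)
        if abs(current - previous) < tol:
            return n, current
        previous = current
    raise RuntimeError("no setting met the tolerance below n_max")

n_chosen, area = auto_configure(math.sin, 0.0, math.pi)
print(n_chosen, round(area, 6))   # 4096 2.0
```

The user supplies only the physics (the function and interval); the numerical setting is found automatically, which is the usability gain the text describes.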

Nanocomputing describes computing that uses extremely small, nanoscale devices; a nanocomputer is a computer whose dimensions are microscopic. Electronic nanocomputers would operate in the same way present-day microcomputers work; the main difference is the physical scale. More and more transistors are squeezed into silicon chips with each passing year.

Unsurprisingly, AI is also beneficial for the future of nanocomputing, that is, computing carried out by nanoscale mechanisms. Nanocomputing devices can execute a function in many ways, ranging from physical operations to computational methods. Because many of these devices depend on intricate physical systems to carry out intricate computational algorithms, machine learning procedures can be used to generate novel information representations for a broad range of uses.

In today’s technology world, the Internet of Things (IoT) has made our world more connected than ever, with billions of IoT devices communicating with one another at the speed of electrons and of our internet connections. These IoT devices, at the edge, collectively generate a tremendous amount of data arriving from all directions and sent to the cloud for processing. This sheer volume of data, at the level of Big Data (BD), is then transferred to and stored in cloud data centers all over the globe. Historical data in these repositories must be compared with fast-paced incoming data to prevent duplication, particularly when the data come in both structured and unstructured formats, so that we can trust them for data warehousing and data analytics. We need data to increase our information and, consequently, our knowledge, which gives us the power to make decisive business decisions [7,8].
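For structured data, comparing incoming records against the historical repository can be sketched with content hashing: each record is serialized in a canonical form and hashed, and only records whose hash has not been seen before are kept. The record fields and function names below are hypothetical, and unstructured data (free text, images) would need other techniques, such as near-duplicate detection, that this sketch does not cover.

```python
import hashlib
import json

def record_key(record):
    """Content hash of a structured record, independent of field order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def ingest(incoming, seen_keys):
    """Keep only records not already in the store; remember the new ones."""
    fresh = []
    for record in incoming:
        key = record_key(record)
        if key not in seen_keys:
            seen_keys.add(key)
            fresh.append(record)
    return fresh

# Hypothetical sensor readings: one already stored, one new (sent twice)
history = {record_key({"sensor": "a", "t": 1, "value": 0.5})}
batch = [{"t": 1, "sensor": "a", "value": 0.5},
         {"sensor": "b", "t": 1, "value": 0.7},
         {"sensor": "b", "t": 1, "value": 0.7}]
print(ingest(batch, history))   # [{'sensor': 'b', 't': 1, 'value': 0.7}]
```

Sorting the keys before hashing makes two records with the same fields in a different order count as duplicates, as with the first record in the batch.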

To be more resilient and to build an efficient Business Resilience System (BRS) around our organization, we need to integrate Artificial Intelligence (AI) with a computing power system such as High-Performance Computing (HPC). To gain insights from collective data that change second by second, we must rely on HPC processing performance and on AI, which informs the dashboard through its Machine Learning (ML) and Deep Learning (DL) components. These combined resilience systems allow us to do business, interact with people, and live our daily lives, particularly in the tiny future world of nanoscience and nanotechnology.

These data insights are typically generated by processing the data through Neural Networks [6] that recognize patterns and categorize the information. Training these neural networks is extremely compute-intensive and requires high-performance system architectures. While CPUs are the workhorses of the data center, the highly repetitive and parallel nature of artificial intelligence workloads can be handled more efficiently by a mix of computing devices.

Of course, building and owning a supercomputer is very costly, so its Total Cost of Ownership (TCO) and Return on Investment (ROI) must be justified by the nature of your business. HPC not only costs a good sum of money but also requires highly specialized experts to operate and use it, and it suits only specialized problems, such as those we encounter in the small-scale world of nanotechnology when trying to see individual atoms, as Professor Richard Feynman discussed in his 1959 talk [1]. Having described the AI, ML, and DL architecture above, we now need a holistic understanding of high-performance computing, which we can summarize as follows.

High Performance Computing most generally refers to the practice of aggregating computing power in a way that delivers much higher performance than one could get out of a typical desktop computer or workstation in order to solve large problems in science, engineering, or business.

The point of having a high-performance computer is that its individual nodes can work together to solve a problem larger than any one computer can easily solve. Just like people, the nodes need to be able to talk to one another to work together meaningfully. Keep this in mind when considering how Artificial Intelligence is driving innovation.
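The divide-and-combine pattern behind nodes working together can be sketched on a single machine. Below, a thread pool stands in for the nodes: the data are split into chunks (scatter), each worker computes a partial result, and the partial results are combined (reduce). This is an illustrative assumption, not how an HPC system is actually programmed; real clusters usually exchange data over a network with a message-passing library such as MPI (for example, MPI_Scatter and MPI_Reduce), and in CPython, threads do not speed up pure-Python arithmetic because of the global interpreter lock.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_sum_of_squares(chunk):
    """Work done independently by one 'node' on its share of the data."""
    return sum(x * x for x in chunk)

def split(data, parts):
    """Scatter: cut data into at most `parts` contiguous chunks."""
    size = max(1, -(-len(data) // parts))   # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]

def cluster_sum_of_squares(data, workers=4):
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)                    # reduce: combine the partial results

data = list(range(1000))
print(cluster_sum_of_squares(data) == sum(x * x for x in data))   # True
```

The same scatter, compute, and reduce structure scales from four threads to thousands of nodes; what changes is the cost of the communication between them.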

As Artificial Intelligence (AI) and nanotechnology progress and thrive, both of their growth curves are on an ascending slope, and the two curves have converged to the point that we can barely see a gap between them. This indicates that the two complement each other and are the right partners for both industries. Given today’s technology in artificial intelligence and nanoscience/nanotechnology, their integration seems inevitable.

“The debate about 'converging technologies' is part of a more comprehensive political and social discourse on nanotechnology, biotechnology, information and communications technology (ICT), brain research, artificial intelligence (AI), robotics, and the sciences that deal with these topics. Convergence is an umbrella term for predictions ranging from an increase in synergetic effects to a merging of these fields, and for demands for government funding of research and development where these fields overlap (read more in our Nanowerk Spotlight: The debate about converging technologies)” [5].

Whenever we speak about nanoengineering or nanotechnology, we think of physics, chemistry, and other engineering fields that deal with small-scale things such as molecules and atoms. The amount of data collected from any research or application in these fields is so large that, to turn it into better information, we have no choice but to rely on AI and, consequently, on ML and DL. Looking ahead to a new generation of AI known as Super Artificial Intelligence (SAI), we rely on biological science for inspiration in developing some of its most effective paradigms, such as Neural Networks (NNs) and evolutionary algorithms [6].

Bridging current nanoscience and AI can boost research in both disciplines and provide a new generation of information and communication technologies that will have an enormous impact on our society, probably providing the means to merge technology and biology. Alongside their collaboration, AI and nanotechnology both need High-Performance Computing (HPC), which supports AI in data analytics and in the predictive analyses carried out through AI’s subsets, Machine Learning (ML) and Deep Learning (DL). HPC also plays a big role in nanocomputing, since our applications of nanotechnology and nanoengineering deal with a great many small-scale things. Although many efforts have been made in recent years to improve resolution and the ability to manipulate atoms, interpreting the microscope signal is still a challenge. The main problem is that most tip-sample interactions are not easy to understand and depend on many parameters. These are the kinds of problems where methods from artificial intelligence can be beneficial [5]. As a concluding note, nanotechnology, the future of the small scale, needs Artificial Intelligence to enhance its data analytics, turning data into the information and knowledge that give us the power to be more decisive [7].

Translation Elongation Factors: are Useful Biomarkers in Cancer? by Cristiano Luigi* in Open Access Journal of Biogeneric Science and Research (JBGSR)

The Eukaryotic Translation Elongation Factors are a large protein family involved in the elongation step of eukaryotic translation, but they also have various moonlighting functions inside the cell, in both normal and pathological conditions. The proteins in this family are EEF1A1, EEF1A2, EEF1B2, EEF1D, EEF1G, and EEF1E1, including their various isoforms, i.e., PTI-1, CCS-3, HD-CL-08, and MBI‐eEF1A. All of these proteins are linked to cancer development and progression and show gene amplification, genomic rearrangements, and altered expression levels in many kinds of cancer. These abnormalities undoubtedly have repercussions on cellular biology and cellular behaviour in the various steps of cancer transformation and progression, and they should also be considered for their role in enhancing invasiveness and metastasis. Thus, the Eukaryotic Translation Elongation Factors may be useful biomarkers for human cancers, although more studies are needed to elucidate their exact contribution as diagnostic, prognostic, and progression markers.

Keywords: Eukaryotic translation elongation factors; translation; cancer; biomarker; EEF1A1; EEF1A2; EEF1B2; EEF1D; EEF1G; EEF1E1; EEF1H; PTI-1; EEF1A1L14; CCS-3; MBI‐eEF1A; HD-CL-08

Abbreviations: Alpha EEFs: Alpha Eukaryotic Translation Elongation Factors; CCS-3: Cervical Cancer Suppressor 3; EEFs: Eukaryotic Translation Elongation Factors; eEF1A1: Eukaryotic Translation Elongation Factor 1 Alpha 1; eEF1A1L14: Eukaryotic Translation Elongation Factor 1-Alpha 1-Like 14; eEF1A2: Eukaryotic Translation Elongation Factor 1 Alpha 2; eEF1B2: Eukaryotic Translation Elongation Factor 1 Beta 2; eEF1D: Eukaryotic Translation Elongation Factor 1 Delta; eEF1G: Eukaryotic Translation Elongation Factor 1 Gamma; eEF1E1: Eukaryotic Translation Elongation Factor 1 Epsilon 1; eEF1H: Eukaryotic Translation Elongation Factor-1 Macromolecular Complex; HD-CL-08: cutaneous T-cell lymphoma antigen (similar to eEF1A1); MARS: Multi-Aminoacyl-tRNA Synthetase Macromolecular Complex; MBI‐eEF1A: More Basic Isoform of eEF1A; Non-alpha EEFs: Non-Alpha Eukaryotic Translation Elongation Factors; PTI-1: Prostate Tumor-Inducing Gene-1.

Translation is one of the most important biological processes in the cell because it turns genetic information into functional proteins. It is formally divided into three consecutive steps: initiation, elongation, and termination. The Eukaryotic Translation Elongation Factors (EEFs) are a large protein family that plays a central role in peptide biosynthesis during the elongation step of translation. This family comprises different proteins and their isoforms and is conventionally divided into two main subgroups: the Non-Alpha Eukaryotic Translation Elongation Factors (Non-alpha EEFs), which comprise Eukaryotic Translation Elongation Factor 1 Beta 2 (eEF1B2), Eukaryotic Translation Elongation Factor 1 Delta (eEF1D), Eukaryotic Translation Elongation Factor 1 Gamma (eEF1G), and Eukaryotic Translation Elongation Factor 1 Epsilon 1 (eEF1E1), including their isoforms; and the Alpha Eukaryotic Translation Elongation Factors (Alpha EEFs), which include Eukaryotic Translation Elongation Factor 1 Alpha 1 (eEF1A1), Eukaryotic Translation Elongation Factor 1 Alpha 2 (eEF1A2), and their isoforms, such as the Prostate Tumor-Inducing Gene-1 (PTI-1), more recently renamed Eukaryotic Translation Elongation Factor 1-Alpha 1-Like 14 (eEF1A1L14); a more basic isoform of eEF1A1 (MBI‐eEF1A); a cutaneous T-cell lymphoma antigen similar to eEF1A1 (HD-CL-08); and Cervical Cancer Suppressor 3 (CCS-3).

The EEF members form a supramolecular complex named the Eukaryotic Translation Elongation Factor-1 Macromolecular Complex (eEF1H), except for eEF1E1, which is a key component of another supramolecular complex, the Multi-Aminoacyl-tRNA Synthetase Macromolecular Complex (MARS). The eEF1H protein complex plays a central role in peptide elongation during eukaryotic protein biosynthesis, in particular in the delivery of aminoacyl-tRNAs to the ribosome, a step coupled to GTP hydrolysis. During the elongation step of translation, the inactive GDP-bound form of eEF1A (eEF1A-GDP) is converted to its active GTP-bound form (eEF1A-GTP) by the eEF1B2GD complex through the exchange of GDP for GTP. Thus, the eEF1B2GD complex acts as a guanine nucleotide exchange factor (GEF) that regenerates eEF1A-GTP for the next elongation cycle [1-3] (Figure 1).

FIGURE 1: The elongation step of translation. The active form of eEF1A, in complex with GTP, delivers an aminoacylated tRNA to the A site of the ribosome. Following correct codon-anticodon recognition, GTP is hydrolyzed and the inactive eEF1A-GDP is released from the ribosome; it is then bound by the eEF1B2GD complex, forming the macromolecular protein aggregate eEF1H. The eEF1B2GD complex is itself formed beforehand by the binding of three subunits: eEF1B2, eEF1G, and eEF1D. This complex promotes the exchange of GDP for GTP, regenerating the active form of eEF1A [1,4-8].

The MARS complex, by contrast, is formed by nine aminoacyl-tRNA synthetases (AARSs) and at least three auxiliary non-synthetase protein components [4-8], one of which is eEF1E1 [9-11]. eEF1E1 seems to contribute to the interaction and anchorage of the MARS complex to the eEF1H complex during the elongation step of translation [11,12].

The EEFs also have multiple non-canonical roles inside the cell, called moonlighting roles, and they are often altered in many kinds of cancer. Genomic rearrangements, gene amplification, novel fusion genes, point mutations, chimeric proteins, and altered expression levels have been detected in many types of cancer and in other human diseases [13]. This wide spectrum of alterations, which is common to other genes and proteins as well, is frequent in cancer cells, which are genetically more unstable than normal cells. These abnormalities certainly have repercussions on cellular biology and cellular behaviour at the various steps of malignant transformation and progression. In the context of protein biosynthesis, the elongation step is likely accelerated and probably loses fidelity, while the control mechanisms fail or become less efficient. This can contribute to the worsening of the malignancy, with direct and/or indirect repercussions on cancer progression, including increased invasiveness and, ultimately, metastasis. The purpose of this work is to briefly summarize the main studies that have identified a role for these proteins in tumor transformation, as a starting point for further studies and analyses.

Non-alpha EEFs include most of the proteins that contribute to the translation elongation step in eukaryotes: eEF1B2, eEF1D, eEF1G, eEF1E1, and their isoforms. Here we deal exclusively with the main proteins, excluding the isoforms, because cancer studies on them are still in their infancy. The expression levels of each member are shown in Table 1.

Table 1: List of EEFs and some of their most important isoforms with their expression levels in cancers.

EEF1B2, also known as EEF1B1, eEF1β, or eEF1Bα, was first identified by Sanders and colleagues in 1991 [14]. It is the smallest subunit of the eEF1H complex, and its moonlighting roles include control of translation fidelity [2], inhibition of protein synthesis in response to stressors, and interaction with the cytoskeleton [15,16]. EEF1B2 gene expression is altered in many cancer types; in particular, it is frequently overexpressed. Furthermore, EEF1B2 is involved in various kinds of genomic translocations and numerous fusion genes [1,17-50].

EEF1D, also known as eEF1Bδ, was first identified by Sanders and colleagues in 1993 [50]. Four isoforms produced by alternative splicing have been detected; the best known are isoform 1, also called eEF1DL, of 647 residues, and isoform 2, of 281 amino acids [51]. Its moonlighting roles include acting as a transcription factor and taking part in the stress response [51-54]. It is involved in a very large number of genomic translocations (and fusion genes) in different kinds of tumors, and it is frequently found overexpressed. Increased EEF1D expression has been shown to have oncogenic potential, resulting in cell transformation [55]; it is therefore considered a cellular proto-oncogene [56].

EEF1G, also known as eEF1γ or eEF1Bγ, was first identified by Sanders and colleagues in 1992 [57]. Two isoforms produced by alternative splicing are known: isoform 1 (chosen as canonical), of 437 residues, and isoform 2, of 487 amino acids [58]. Its moonlighting roles include interaction with the cytoskeleton [27,59] and with some nuclear and cytoplasmic proteins, such as RNA polymerase II [60], TNF receptor-associated protein 1 [61], and membrane-bound receptors [62]. In addition, it has mRNA-binding properties [60-63] and is a positive regulator of the NF-κB signalling pathway [64]. It is involved in some genomic translocations (and fusion genes) in different kinds of tumors, and it is frequently found overexpressed.

EEF1E1, also known as p18 or AIMP3, was first identified by Mao and colleagues in 1998 [65]. It is the smallest component of the MARS complex [66] and has various moonlighting roles inside the cell: it seems to play a role in mammalian embryonic development [67], in the DNA damage response [68], and in the degradation of mature Lamin A [69]. Many mutations in both the gene sequence and the amino acid sequence of EEF1E1 have been discovered in cancer cells, together with genomic translocations, novel fusion genes, and altered expression levels.

EEF1A1, also known as eEF1α or EF-1α, is one of the most studied translation elongation factors. It is present in almost all cell types, with a few exceptions, because it plays a key role in translation: in eukaryotes, it promotes the binding of aminoacyl-tRNA (aa-tRNA) to the A site of the ribosome during the elongation step of protein synthesis. To carry out this function it hydrolyzes a molecule of GTP, becoming inactive, and therefore needs to be recharged to its active form by the eEF1B2GD complex [2,3]. It has various moonlighting functions, including cytoskeleton remodelling [70], promotion of misfolded protein degradation [71], control of the cell cycle [72], and promotion of apoptosis [72]. EEF1A1 is often amplified and overexpressed in cancers [72]. This is related not only to its key role in protein synthesis but also to its many moonlighting functions.

A more basic isoform of eEF1A1, MBI‐eEF1A, was first identified by Dapas and colleagues [36] in human haematopoietic cancer cell lines. This finding raises the possibility that post-translational modifications of eEF1A1 may also be related to cancer development and/or progression, which should be studied in depth [73].

PTI-1 was first identified by Shen and colleagues in 1995 [74]. It is similar to eEF1A, from which its amino acid sequence differs by the loss of 67 amino acids at the N-terminal region. Initially considered a prostate cancer-specific oncogene, it later turned out to be unrelated to prostate cancer; its presence was instead attributed to infection of the cells by bacteria of the genus Mycoplasma, in particular Mycoplasma hyorhinis [75]. The exact role of PTI-1 is unknown, but it has been suggested that it might reduce translational fidelity and thereby contribute to or drive tumorigenesis [76].

CCS-3 was first identified by Rho and colleagues in 2006 [43]. It is similar to eEF1A, from which its amino acid sequence differs by the loss of 101 amino acids at the N-terminal region. Its expression level is very low to undetectable in human cervical cancer cells, whereas it is higher in normal human cell lines. The functions of CCS-3 are still poorly understood; however, the studies carried out to date suggest that it may act as a transcriptional repressor [43-77]. Furthermore, low levels of its expression appear to prevent apoptosis, suggesting that at normal levels it may also have anti-tumor activity [43].

A cutaneous T-cell lymphoma antigen, named HD-CL-08, was found to show high sequence homology with eEF1A1 while lacking 77 amino acids in the N-terminal portion [43-78].

EEF1A2 is closely related to EEF1A1, and its expression is normally restricted to a few tissues, namely adult brain, heart, and skeletal muscle, where it completely replaces EEF1A1 [79]. It is not expressed in other tissues under physiological conditions. The role of EEF1A2 in tumor transformation has been studied in many tumors, but the most informative studies have been carried out in ovarian and breast cancers. EEF1A2 is in fact considered a putative oncogene in ovarian cancer [46]. Like EEF1A1, EEF1A2 is highly expressed in many cancer types, and its amplification is associated with poor clinical prognosis and increased tumor aggressiveness [72].

The Eukaryotic Translation Elongation Factor family has been studied for over thirty years. Although data on expression levels are inconsistent across studies, the large body of research and publications in the literature suggests that EEFs participate actively in tumorigenesis and may therefore be useful biomarkers for human cancers. What still needs to be clarified and defined unambiguously is the phase of cancer evolution in which they make the greatest contribution and play the greatest role, so that they could be used as diagnostic, prognostic, and progression markers, among other applications. They should also be studied and evaluated as indicators for risk assessment, screening, differential diagnosis, prediction of response to treatment, and monitoring of metastases.

To read more about this article, click on:

https://biogenericpublishers.com/jbgsr.ms.id.00138.text/

https://biogenericpublishers.com/pdf/JBGSR.MS.ID.00138.pdf